A while back someone asked me if it’s possible to monitor Azure File Shares. The question came up because a WVD farm had crashed after its Azure File Share reached its quota very quickly. After some consideration and testing, I first tried using the connection string to extract this data, but that turned out to be a fairly futile attempt.

Instead, I’ve used the Secure Application Model and Azure Lighthouse to connect to all tenants and compare the quota against the actual usage. That way it becomes fairly easy to alert on any Azure Storage that’s running out of space. This script is designed to loop through all your tenants, but can easily be modified to do a single tenant too.

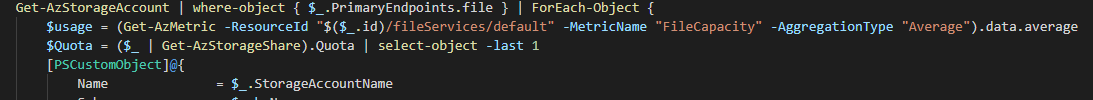

The Script

######### Secrets #########

$ApplicationId = 'ApplicationID'

$ApplicationSecret = 'ApplicationSecret' | ConvertTo-SecureString -Force -AsPlainText

$TenantID = 'YourTenantID'

$RefreshToken = 'Refreshtoken'

$UPN = "A-Valid-UPN"

$MinimumFreeGB = 50

######### Secrets #########

$credential = New-Object System.Management.Automation.PSCredential($ApplicationId, $ApplicationSecret)

Try {

$azureToken = New-PartnerAccessToken -ApplicationId $ApplicationID -Credential $credential -RefreshToken $refreshToken -Scopes 'https://management.azure.com/user_impersonation' -ServicePrincipal -Tenant $TenantId

$graphToken = New-PartnerAccessToken -ApplicationId $ApplicationId -Credential $credential -RefreshToken $refreshToken -Scopes 'https://graph.microsoft.com/.default'

}

catch {

write-DRMMAlert "Could not get tokens: $($_.Exception.Message)"

exit 1

}

Connect-Azaccount -AccessToken $azureToken.AccessToken -GraphAccessToken $graphToken.AccessToken -AccountId $upn -TenantId $tenantID

$Subscriptions = Get-AzSubscription | Where-Object { $_.State -eq 'Enabled' } | Sort-Object -Unique -Property Id

$fileShares = foreach ($Sub in $Subscriptions) {

$null = $sub | Set-AzContext

Get-AzStorageAccount | where-object { $_.PrimaryEndpoints.file } | ForEach-Object {

$usage = (Get-AzMetric -ResourceId "$($_.id)/fileServices/default" -MetricName "FileCapacity" -AggregationType "Average").data.average

$Quota = ($_ | Get-AzStorageShare).Quota | select-object -last 1

[PSCustomObject]@{

Name = $_.StorageAccountName

Sub = $sub.Name

ResourceGroupName = $_.ResourceGroupName

PrimaryLocation = $_.PrimaryLocation

'Quota GB' = [int64]$Quota

'usage GB' = [math]::round($usage / 1024 / 1024 / 1024)

}

}

}

$QuotaReached = $fileShares | Where-Object { $_.'quota GB' - $_.'usage GB' -lt $MinimumFreeGB -and $_.'Quota gb' -ne 0 }

if (!$QuotaReached) {

write-host 'Healthy'

}

else {

write-host "Unhealthy. Please check diagnostic data"

$QuotaReached

}
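
If you want to try the threshold check without connecting to Azure first, here is a minimal sketch that runs the same filter against some sample objects. The account names and numbers are made up for illustration, and the `Free GB` column is an extra I added for readability; it isn't part of the script above. I'm assuming a quota of 0 means no file share was found on the account, which is why those are skipped.

```powershell
# Hypothetical sample data, shaped like the objects the script builds. All values are made up.
$MinimumFreeGB = 50
$sampleShares = @(
    [PSCustomObject]@{ Name = 'profilesa';  'Quota GB' = [int64]1024; 'Usage GB' = 990 }
    [PSCustomObject]@{ Name = 'archivesa';  'Quota GB' = [int64]5120; 'Usage GB' = 1200 }
    [PSCustomObject]@{ Name = 'nosharessa'; 'Quota GB' = [int64]0;    'Usage GB' = 0 }
)

# Same filter as the script: flag anything with less free space than the threshold,
# and skip accounts that report a quota of 0.
$QuotaReached = $sampleShares | Where-Object {
    ($_.'Quota GB' - $_.'Usage GB') -lt $MinimumFreeGB -and $_.'Quota GB' -ne 0
}

if (!$QuotaReached) {
    Write-Host 'Healthy'
}
else {
    Write-Host 'Unhealthy. Please check diagnostic data'
    # Show how much room is left on each flagged account.
    $QuotaReached | Select-Object Name, @{ Name = 'Free GB'; Expression = { $_.'Quota GB' - $_.'Usage GB' } }
}
```

With this sample data only `profilesa` gets flagged, since it has 34 GB left against a 50 GB minimum.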

And that’s it! As always, Happy PowerShelling.