We collab with

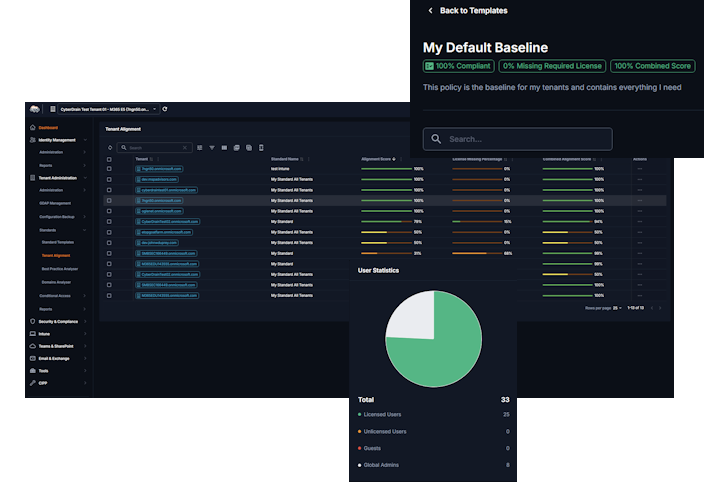

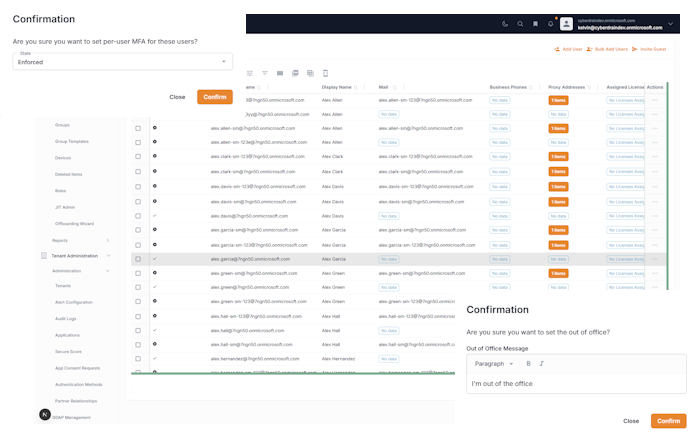

The Pain We Actually Solve

Multi-tenant management across dozens of Microsoft portals, GDAP assignments and permission headaches, repetitive user provisioning and license management, Graph API complexity without PowerShell mastery, Conditional Access policy deployment at scale, security compliance across all client tenants, and warranty updates for all your devices. That’s a lot to solve, and we know it. We also want to make sure you find belonging in our community. It’s not just about selling you tools; we help you reach the next level of your career.

Where the Real Work Happens

Real-time support from fellow MSPs, troubleshooting help, and direct access to the development team. Open-source CIPP platform and API - contribute code, report issues, and shape the roadmap with real feedback.

8000+ Community Members

6000+ GitHub Forks

150+ Active Contributors

Ready to Stop Fighting Microsoft Portals?

Join thousands of MSPs who’ve ditched the multi-tab nightmare for CIPP’s unified automation. Built by the community, for the community.

Community by CyberDrain

CyberDrain exists to solve the real, daily pain for MSPs. No bloated vendors, no half-baked tools - just community-powered automation that actually works.

- Primary hub for MSP community support and troubleshooting

- Direct access to development team and real-time feedback

- Open-source platform with active community contributions

- Comprehensive documentation and MSP best practices

- Always up-to-date resources and guides

What Our Community Says

Real feedback from MSPs who use our products daily to manage their environments and automate their workflows.

The CyberDrain Improved Partner Portal is a testament to the power of simplicity. With its straightforward design and robust functionality, managing multiple Microsoft 365 tenants has become a breeze. Thanks to CIPP!

CIPP has touched every facet of our business - from Solutions Architects to Support to Strategic Advisors - in an incredibly beneficial way.

We manage more than 1000 tenants, and the partner portal limits us in every way. CIPP allows us to break through those limits and make sure we can manage all of our tenants.

Not only does CIPP save us time, but it also allows our team to be far more efficient with our offboards and tenant hopping between the various services without the need to use our Break Glass Account. The team loves it! One process alone saves us over 8 mins per ticket. If you have not checked out CIPP, you are missing out.

I would highly recommend the CyberDrain Partner Portal to any IT professional who is looking for a comprehensive, user-friendly platform that can help them manage their business more effectively. The level of support and collaboration that it offers is truly unparalleled, and I have no doubt that it will continue to be an essential tool for me in the years to come.

CIPP allows us to perform multi-tenant reporting and actions in a way unmatched by other solutions. The pace of innovation with CIPP means we see new functionality in weeks or months rather than years.

When it comes to managing service desks, we’ve always sought out innovative tools and approaches to streamline our processes. That’s why we were thrilled when we discovered CIPP, a game-changing solution that has completely revolutionized the way we run our service desk.

I mean, have you ever seen a platform that can turn back time? Cause CIPP can… It’s like a DeLorean, but instead of going 88 mph, it goes 88% faster in task resolution time. And the best part? It’s powered by fairy dust and rainbows. Okay, maybe not really, but that’s how it feels.